Defining your pipeline steps

Pipeline steps are defined in YAML and are either stored in Buildkite or in your repository using a pipeline.yml file.

Defining your pipeline steps in a pipeline.yml file gives you access to more configuration options and environment variables than the web interface, and allows you to version, audit and review your build pipelines alongside your source code.

Getting started

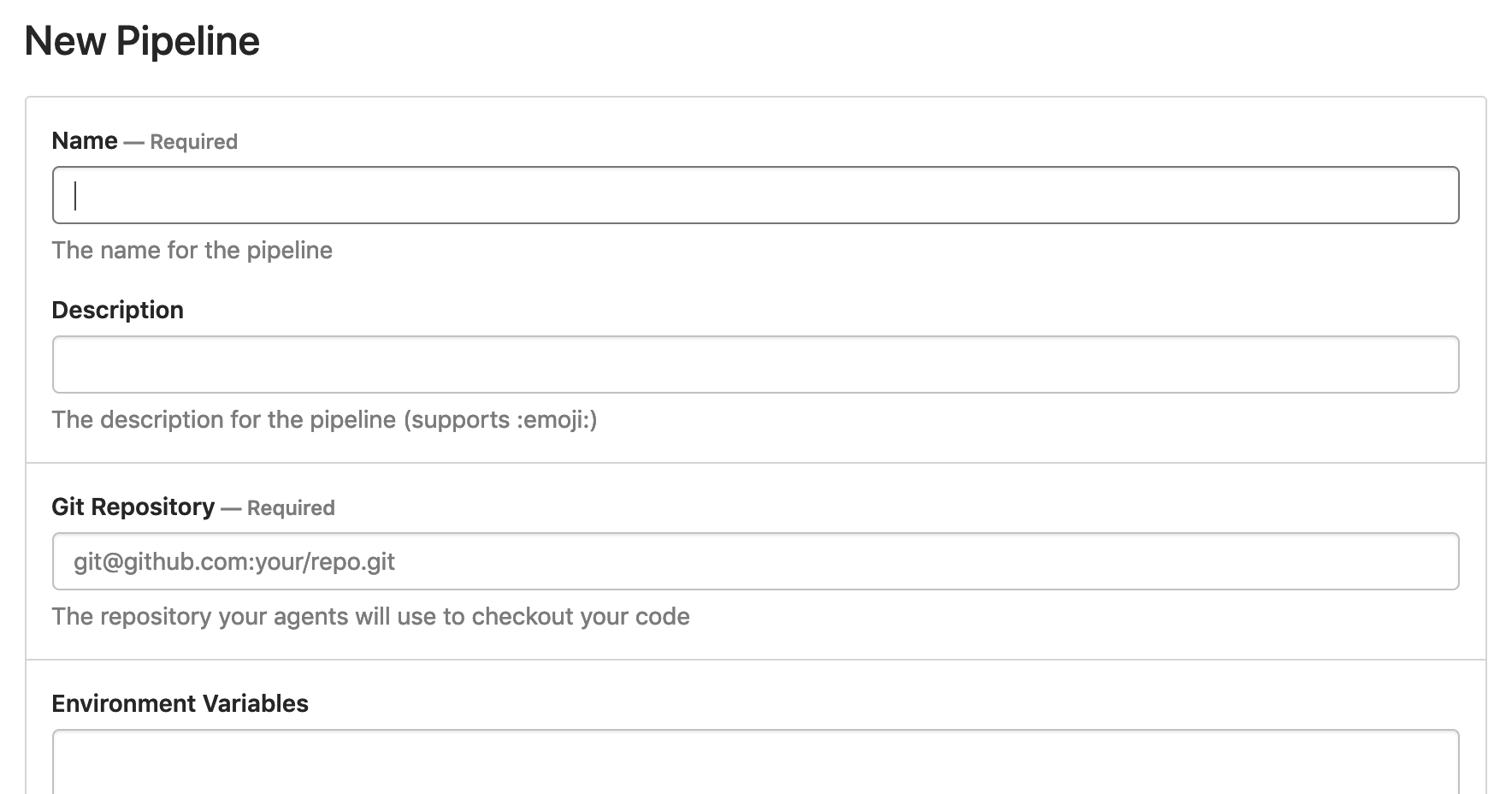

On the Pipelines page, select New pipeline to begin creating a new pipeline.

The required fields are Name and Git Repository.

You can set up webhooks at this point, but this step is optional. You can find the webhook setup instructions for your repository provider in your pipeline's settings.

Both the REST API and GraphQL API can be used to create a pipeline programmatically. See the Pipelines REST API and the GraphQL API for details and examples.
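As a minimal sketch of the REST approach, the bash functions below build a request body and send it with curl. They assume the v2 endpoint POST https://api.buildkite.com/v2/organizations/{org.slug}/pipelines, which accepts name, repository, and configuration (YAML steps) fields. Confirm the details in the Pipelines REST API documentation before relying on this. ORG_SLUG and BUILDKITE_API_TOKEN are placeholder variables that you set yourself.

```shell
#!/usr/bin/env bash
# Sketch: create a pipeline with the Buildkite REST API.
# Assumptions: ORG_SLUG and BUILDKITE_API_TOKEN are placeholders you set yourself,
# and the pipeline name and repository URL contain no double quotes or backslashes.
set -euo pipefail

# Print the JSON request body. The configuration field holds YAML steps that
# upload .buildkite/pipeline.yml; each \n in the output is a JSON-escaped newline.
pipeline_payload() {
  local name="$1" repo="$2"
  printf '{"name": "%s", "repository": "%s", "configuration": "steps:\\n  - label: \\":pipeline: Pipeline upload\\"\\n    command: buildkite-agent pipeline upload"}\n' \
    "$name" "$repo"
}

# Send the request. --fail makes curl exit non-zero on an HTTP error status.
create_pipeline() {
  curl --fail --silent --show-error \
    -X POST "https://api.buildkite.com/v2/organizations/${ORG_SLUG}/pipelines" \
    -H "Authorization: Bearer ${BUILDKITE_API_TOKEN}" \
    -H "Content-Type: application/json" \
    -d "$(pipeline_payload "$1" "$2")"
}
```

For example, after exporting ORG_SLUG and BUILDKITE_API_TOKEN, create_pipeline "My pipeline" "git@github.com:acme/app.git" would create a pipeline whose only step uploads .buildkite/pipeline.yml. The payload is built with printf rather than a JSON tool so the sketch has no extra dependencies. As a result, inputs containing quotes are not supported.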

Adding steps

There are two ways to define steps in your pipeline: using the YAML step editor in Buildkite or with a pipeline.yml file. The web steps visual editor is still available if you haven't migrated to YAML steps but will be deprecated in the future.

If you have not yet migrated to YAML steps, you can do so on your pipeline's settings page. See the Migrating to YAML steps guide for more information about the changes and the migration process.

However you add steps to your pipeline, keep in mind that steps may run on different agents. It is good practice to install your dependencies in the same step that you run them.

Step defaults

If you're using YAML steps, you can set defaults which will be applied to every command step in a pipeline unless they are overridden by the step itself. You can set default agent properties and default environment variables:

- agents: A map of agent characteristics, such as os or queue, that restrict which agents the command will run on
- env: A map of environment variables to apply to all steps

Environment variable precedence

Because you can set environment variables in many different places, check environment variable precedence to ensure your environment variables work as expected.

For example, to set steps do-something.sh and do-something-else.sh to use the something queue and the step do-another-thing.sh to use the another queue:

agents:
  queue: "something"
steps:
  - command: "do-something.sh"
  - command: "do-something-else.sh"
  - label: "Another"
    command: "do-another-thing.sh"
    agents:
      queue: "another"

YAML steps editor

To add steps using the YAML editor, click the 'Edit Pipeline' button on the Pipeline Settings page.

Starting your YAML with the steps object, you can add as many steps as you require of each different type. Quick reference documentation and examples for each step type can be found in the sidebar on the right.

pipeline.yml file

Before getting started with a pipeline.yml file, you'll need to tell Buildkite where it will be able to find your steps.

In the YAML steps editor in your Buildkite dashboard, add the following YAML:

steps:
  - label: ":pipeline: Pipeline upload"
    command: buildkite-agent pipeline upload

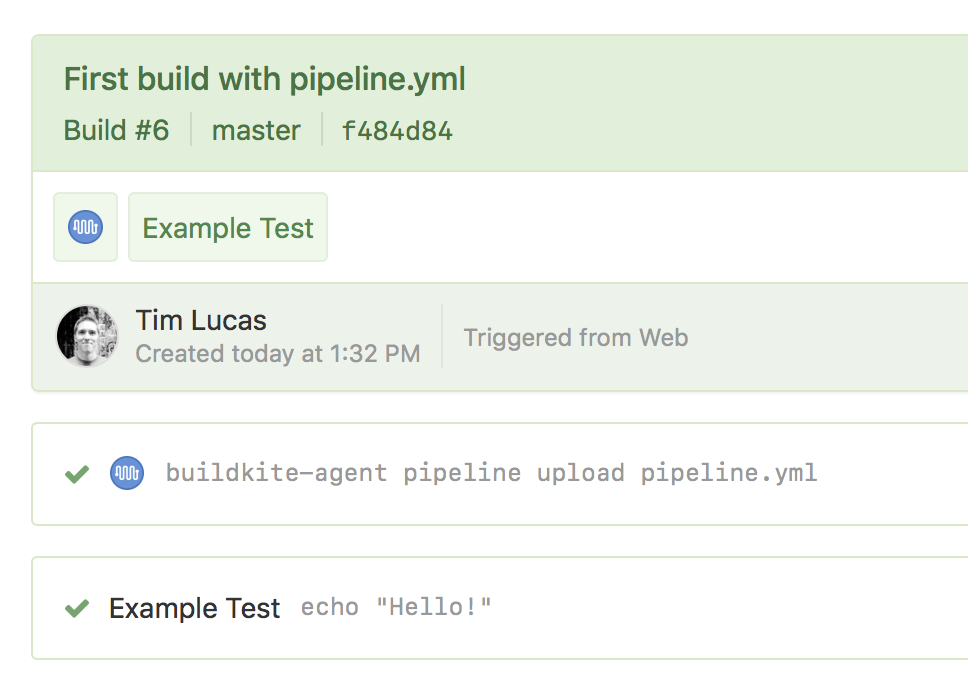

When you eventually run a build from this pipeline, this step will look for a directory called .buildkite containing a file named pipeline.yml. Any steps it finds inside that file will be uploaded to Buildkite and will appear during the build.

When using WSL2 or PowerShell Core, you cannot add a buildkite-agent pipeline upload command step directly in the YAML steps editor. To work around this, there are two options:

* Define all of your steps directly in the YAML steps editor, without a pipeline upload step

* Place the buildkite-agent pipeline upload command in a script file. In the YAML steps editor, add a command to run that script file. It will upload your pipeline.
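On WSL2, the second option could look like the bash sketch below. The script name .buildkite/upload.sh and the AGENT_BIN variable are hypothetical. AGENT_BIN exists only so the function can be dry-run. The script also assumes the agent sets BUILDKITE=true in the job environment, so it uploads only when it runs inside a build.

```shell
#!/usr/bin/env bash
# Hypothetical .buildkite/upload.sh: wraps the upload command in a script file
# so the YAML steps editor only needs to run this script.
set -euo pipefail

upload_pipeline() {
  # AGENT_BIN is an illustration-only override (for example AGENT_BIN=echo for a
  # dry run); normally the buildkite-agent binary on the PATH is used.
  local agent="${AGENT_BIN:-buildkite-agent}"
  "$agent" pipeline upload "${1:-.buildkite/pipeline.yml}"
}

# The agent sets BUILDKITE=true for jobs, so the upload only runs inside a build.
if [[ "${BUILDKITE:-}" == "true" ]]; then
  upload_pipeline "$@"
fi
```

The step in the YAML steps editor would then run bash .buildkite/upload.sh.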

Create your pipeline.yml file in a .buildkite directory in your repo.

If you're using any tools that ignore hidden directories, you can store your pipeline.yml file either in the top level of your repository, or in a non-hidden directory called buildkite. The upload command will search these places if it doesn't find a .buildkite directory.
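The bash function below illustrates the lookup described above by searching the same three locations. It is only a sketch. The order of the two fallback locations is an assumption, and the agent's real search list may include other file names (for example, .yaml or .json variants). Check the buildkite-agent pipeline upload documentation for the exact behavior.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the first pipeline file found under a repository root (default: .).
# Mirrors the locations described in the text; this is not the agent's own code.
find_pipeline_file() {
  local root="${1:-.}" candidate
  for candidate in .buildkite/pipeline.yml buildkite/pipeline.yml pipeline.yml; do
    if [[ -f "$root/$candidate" ]]; then
      printf '%s\n' "$candidate"
      return 0
    fi
  done
  echo "no pipeline.yml found under $root" >&2
  return 1
}
```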

The following example YAML defines a pipeline with one command step that will echo 'Hello!' into your build log:

steps:
  - label: "Example Test"
    command: echo "Hello!"

With the above example code in a pipeline.yml file, commit and push the file up to your repository. If you have set up webhooks, this will automatically create a new build. You can also create a new build using the 'New Build' button on the pipeline page.

For more example steps and detailed configuration options, see the example pipeline.yml below, or the documentation for each step type.

If your pipeline has more than one step and you have multiple agents available to run them, they will automatically run at the same time. If your steps rely on running in sequence, you can separate them with wait steps. This will ensure that any steps before the 'wait' are completed before steps after the 'wait' are run.
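If you generate steps with a script, one way to force an order is to emit a wait step between consecutive commands. The bash sketch below assumes each command needs no quoting beyond a surrounding pair of double quotes. The script names in the usage line are placeholders.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Emit a pipeline that runs each given command in order by placing a wait
# step between consecutive command steps.
sequential_steps() {
  local cmd first=1
  echo "steps:"
  for cmd in "$@"; do
    if (( first )); then
      first=0
    else
      echo "  - wait"
    fi
    printf '  - command: "%s"\n' "$cmd"
  done
}
```

For example, sequential_steps lint.sh test.sh deploy.sh | buildkite-agent pipeline upload would run the three scripts one after another, even with several agents available.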

Explicit dependencies in uploaded steps

If a step depends on an upload step, then all steps uploaded by that step become dependencies of the original step. For example, if step B depends on step A, and step A uploads step C, then step B will also depend on step C.
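The bash function below prints a pipeline that matches this example, written as a generator for pipeline upload and using the key and depends_on step attributes. The file .buildkite/step-c.yml and the step-b.sh script are hypothetical. Because step B depends on step A, and step A uploads step C, step B should not start until step C has finished.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print a pipeline where step B explicitly depends on upload step A.
# Any steps that A uploads (here, the contents of .buildkite/step-c.yml)
# also become dependencies of step B.
dependency_example() {
  cat <<'YAML'
steps:
  - key: "step-a"
    label: "A: upload step C"
    command: "buildkite-agent pipeline upload .buildkite/step-c.yml"
  - key: "step-b"
    label: "B"
    command: "step-b.sh"
    depends_on: "step-a"
YAML
}
```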

When an agent runs a step, it starts from a clean checkout of the pipeline's repository. If your commands or scripts rely on the output from previous steps, you need to either combine them into a single script or use artifacts to pass data between steps. This lets any agent pick up any step, and lets steps run in parallel to speed up your build.
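As a sketch of the artifact approach, the first step below uploads dist/* with artifact_paths. After a wait step, the second step downloads those files with buildkite-agent artifact download before testing. The make build and make test commands and the dist/ directory are placeholders for your own build.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print a two-step pipeline that hands build output to a later step through
# artifacts, because each step starts from a clean checkout, possibly on another agent.
artifact_handoff_steps() {
  cat <<'YAML'
steps:
  - label: "Build"
    command: "make build"
    artifact_paths: "dist/*"
  - wait
  - label: "Test"
    command: "buildkite-agent artifact download 'dist/*' . && make test"
YAML
}
```

The wait step matters here. Without it, the two steps could run at the same time, and the download could run before the build step has uploaded anything.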

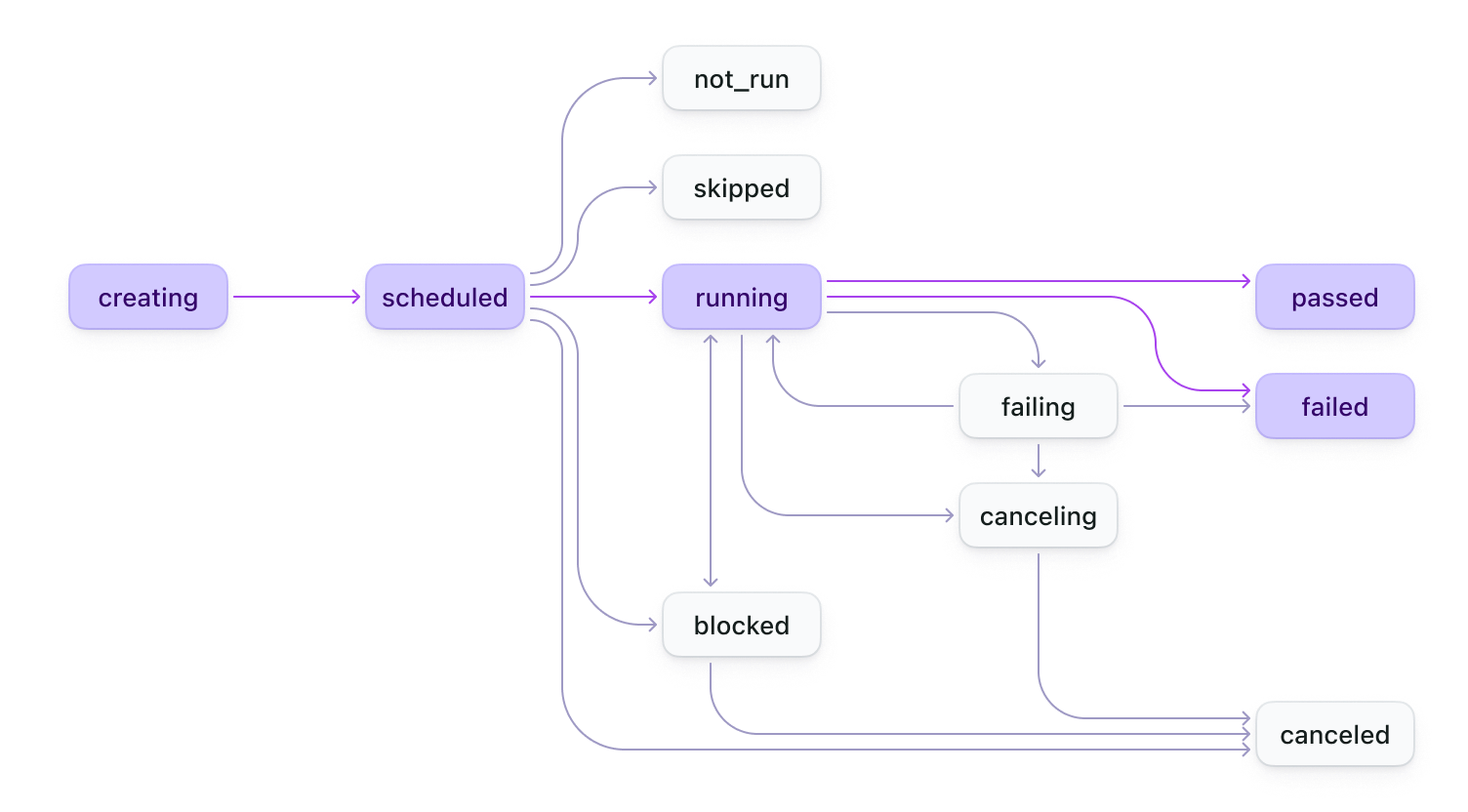

Build states

When you run a pipeline, a build is created. The following diagram shows you how builds progress from start to end.

A build state can be one of the following values:

creating, scheduled, running, passed, failing, failed, blocked, canceling, canceled, skipped, not_run.

You can query for finished builds to return builds in any of the following states: passed, failed, blocked, or canceled.

When a triggered build fails, the step that triggered it will be stuck in the running state forever.

When all the steps in a build are skipped (either by using the skip attribute or by using an if condition), the build state is marked as not_run.

Unlike the notify attribute, the build state value for a steps attribute may differ depending on the state of a pipeline. For example, when a build is blocked within a steps section, the state value in the API response for getting a build keeps its last value (for example, passed) rather than changing to blocked. Instead, the response also returns a blocked field with a value of true.
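If you display build status from the API, you may want to account for this behavior. The hypothetical bash helper below takes the state and blocked values, extracted from the JSON response however you prefer. It reports blocked whenever the blocked field is true and the reported state otherwise.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Given a build's state and blocked fields from a "get a build" API response,
# print "blocked" when blocked is true, otherwise the reported state.
effective_build_state() {
  local state="$1" blocked="$2"
  if [[ "$blocked" == "true" ]]; then
    echo "blocked"
  else
    echo "$state"
  fi
}
```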

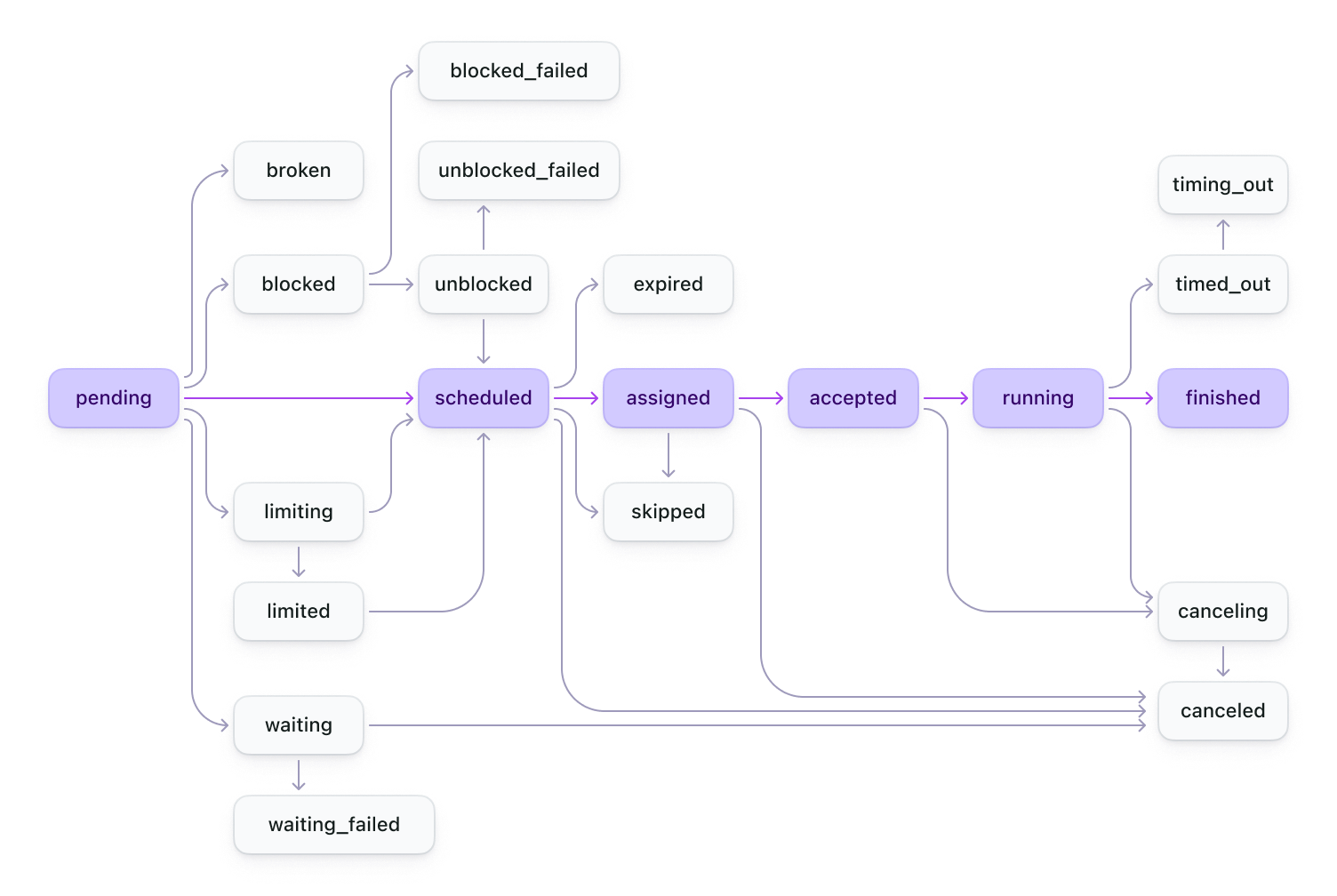

Job states

When you run a pipeline, a build is created. Each of the steps in the pipeline ends up as a job in the build, and these jobs are then distributed to available agents. Job states have a similar flow to build states but with a few extra states. The following diagram shows you how jobs progress from start to end.

| Job state | Description |
|---|---|
| pending | The job has just been created and doesn't have a state yet. |
| waiting | The job is waiting on a wait step to finish. |
| waiting_failed | The job was in a waiting state when the build failed. |
| blocked | The job is waiting on a block step to finish. |
| blocked_failed | The job was in a blocked state when the build failed. |
| unblocked | This block job has been manually unblocked. |
| unblocked_failed | This block job was in an unblocked state when the build failed. |
| limiting | The job is waiting on a concurrency group check before becoming either limited or scheduled. |
| limited | The job is waiting for jobs with the same concurrency group to finish. |
| scheduled | The job is scheduled and waiting for an agent. |
| assigned | The job has been assigned to an agent, and it's waiting for it to accept. |
| accepted | The job was accepted by the agent, and now it's waiting to start running. |
| running | The job is running. |
| finished | The job has finished. |
| canceling | The job is currently canceling. |
| canceled | The job was canceled. |
| timing_out | The job is timing out for taking too long. |
| timed_out | The job timed out. |
| skipped | The job was skipped. |
| broken | The job's configuration means that it can't be run. |
| expired | The job expired before it was started on an agent. |

As well as the states shown in the diagram, the following progressions can occur:

| Can progress to skipped | Can progress to canceling or canceled |
|---|---|
| pending | accepted |
| waiting | pending |
| blocked | limiting |
| limiting | limited |
| limited | blocked |
| accepted | unblocked |
| broken | |

Differentiating between broken, skipped and canceled states:

- Jobs become broken when their configuration prevents them from running. This might be because their branch configuration doesn't match the build's branch, or because a conditional returned false.
- This is distinct from skipped jobs, which might happen if a newer build is started and build skipping is enabled. Broadly, jobs break because of something inside the build, and are skipped by something outside the build.
- Jobs can be canceled intentionally, either using the Buildkite UI or one of the APIs.

Differentiating between timing_out, timed_out, and expired states:

- Jobs become timing_out, and then timed_out, when they start running on an agent but don't complete within the timeout period.
- Jobs become expired when they reach the scheduled job expiry timeout before being picked up by an agent.

See Build timeouts for information about setting timeout values.
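For example, a command step can set its own limit with the timeout_in_minutes attribute. A job that runs longer than this limit moves through timing_out to timed_out. The bash generator below is a sketch, and the run-tests.sh command and 10-minute value in the usage are placeholders.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print a command step with a per-step timeout. If the job runs on an agent for
# longer than the given number of minutes, it moves to timing_out, then timed_out.
step_with_timeout() {
  local command="$1" minutes="$2"
  if ! [[ "$minutes" =~ ^[0-9]+$ ]]; then
    echo "timeout must be a whole number of minutes: $minutes" >&2
    return 1
  fi
  printf 'steps:\n  - command: "%s"\n    timeout_in_minutes: %s\n' "$command" "$minutes"
}
```

For example, step_with_timeout run-tests.sh 10 | buildkite-agent pipeline upload would add a step that times out after ten minutes of running.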

The REST API does not return finished, but returns passed or failed according to the exit status of the job. It also lists limiting and limited as scheduled for legacy compatibility.
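The hypothetical bash helper below restates those rules. It can be handy when you compare job states from the REST and GraphQL APIs. It illustrates the mapping described here and is not Buildkite code. It assumes that exit status 0 means passed and that anything else means failed, ignoring options such as soft failures.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Map a job state to the value the REST API reports, following the rules in
# the text: finished becomes passed or failed depending on the exit status,
# and limiting/limited are reported as scheduled. Other states pass through.
rest_api_job_state() {
  local state="$1" exit_status="${2:-}"
  case "$state" in
    finished)
      if [[ "$exit_status" == "0" ]]; then echo "passed"; else echo "failed"; fi
      ;;
    limiting|limited)
      echo "scheduled"
      ;;
    *)
      echo "$state"
      ;;
  esac
}
```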

A job state can be one of the following values:

pending, waiting, waiting_failed, blocked, blocked_failed, unblocked, unblocked_failed, limiting, limited, scheduled, assigned, accepted, running, finished, canceling, canceled, expired, timing_out, timed_out, skipped, or broken.

Each job in a build also has a footer that displays exit status information. It may include an exit signal reason, which indicates whether the Buildkite agent stopped or the job was canceled.

Exit status information is available in the GraphQL API but not the REST API.

Example pipeline

Here's a more complete example based on the Buildkite agent's build pipeline. It contains script commands, wait steps, block steps, and automatic artifact uploading:

steps:
  - label: ":hammer: Tests"
    command: scripts/tests.sh
    env:
      BUILDKITE_DOCKER_COMPOSE_CONTAINER: app
  - wait
  - label: ":package: Package"
    command: scripts/build-binaries.sh
    artifact_paths: "pkg/*"
    env:
      BUILDKITE_DOCKER_COMPOSE_CONTAINER: app
  - wait
  - label: ":debian: Publish"
    command: scripts/build-debian-packages.sh
    artifact_paths: "deb/**/*"
    branches: "main"
    agents:
      queue: "deploy"
  - block: ":shipit: Release"
    branches: "main"
  - label: ":github: Release"
    command: scripts/build-github-release.sh
    artifact_paths: "releases/**/*"
    branches: "main"
  - wait
  - label: ":whale: Update images"
    command: scripts/release-docker.sh
    branches: "main"
    agents:
      queue: "deploy"

Step types

Buildkite pipelines are made up of the following step types:

- Command step
- Wait step
- Block step
- Input step
- Trigger step
- Group step

Customizing the pipeline upload path

By default, the pipeline upload step reads your pipeline definition from .buildkite/pipeline.yml in your repository. You can specify a different file path by adding it as the first argument:

steps:
  - label: ":pipeline: Pipeline upload"
    command: buildkite-agent pipeline upload .buildkite/deploy.yml

A common use for custom file paths is when separating test and deployment steps into two separate pipelines. Both pipeline.yml files are stored in the same repo and both Buildkite pipelines use the same repo URL. For example, your test pipeline's upload command could be:

buildkite-agent pipeline upload .buildkite/pipeline.yml

And your deployment pipeline's upload command could be:

buildkite-agent pipeline upload .buildkite/pipeline.deploy.yml
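You might prefer to keep a single upload script that both pipelines share. In that case, one option is to choose the file from the BUILDKITE_PIPELINE_SLUG environment variable that the agent provides. The bash sketch below assumes a hypothetical naming convention in which the deployment pipeline's slug ends in -deploy.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Choose which pipeline file to upload based on the pipeline slug, so two
# Buildkite pipelines that share one repository can share one upload script.
# The "-deploy" suffix convention is hypothetical.
pipeline_file_for_slug() {
  local slug="$1"
  case "$slug" in
    *-deploy) echo ".buildkite/pipeline.deploy.yml" ;;
    *) echo ".buildkite/pipeline.yml" ;;
  esac
}
```

A script in your repository would then run buildkite-agent pipeline upload "$(pipeline_file_for_slug "$BUILDKITE_PIPELINE_SLUG")".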

For a list of all command line options, see the buildkite-agent pipeline upload documentation.

Dynamic pipelines

Because the pipeline upload step runs on your agent machine, you can generate pipelines dynamically using scripts from your source code. This provides you with the flexibility to structure your pipelines however you require.

The following example generates a list of parallel test steps based upon the test/* directory within your repository:

#!/bin/bash

# exit immediately on failure, or if an undefined variable is used
set -eu

# begin the pipeline.yml file
echo "steps:"

# add a new command step to run the tests in each test directory
for test_dir in test/*/; do
  echo "  - command: \"run_tests ${test_dir}\""
done

To use this script, you'd save it to .buildkite/pipeline.sh inside your repository, ensure it is executable, and then update your pipeline upload step to use the new script:

.buildkite/pipeline.sh | buildkite-agent pipeline upload

When the build runs, it executes the script and pipes the output to the pipeline upload command. The upload command then inserts the steps from the script into the build immediately after the upload step.

Since the upload command inserts steps immediately after the upload step, they appear in reverse order when you upload multiple steps in one command. To avoid the steps appearing in reverse order, we suggest you upload the steps in reverse order (the step you want to run first goes last). That way, they'll be in the expected order when inserted.
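As a sketch, suppose a single step runs several pipeline upload commands. The bash function below uploads the given files last to first, so the steps from the first file end up first in the build. The AGENT_BIN override is hypothetical and exists only so you can dry-run the function with AGENT_BIN=echo.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Upload each pipeline file in reverse order. Every upload is inserted directly
# after the upload step, so uploading the last file first leaves the files'
# steps in their original order in the build.
upload_in_order() {
  local agent="${AGENT_BIN:-buildkite-agent}" i
  for (( i = $#; i >= 1; i-- )); do
    # ${!i} expands to the i-th positional parameter.
    "$agent" pipeline upload "${!i}"
  done
}
```

For example, upload_in_order .buildkite/lint.yml .buildkite/test.yml uploads test.yml first and lint.yml second, so the lint steps appear before the test steps.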

In the following pipeline.yml example, when the build runs it executes the .buildkite/pipeline.sh script. The test steps from the script are then added to the build before the wait step and the command step. After the test steps have run, the wait step and the command step run.

steps:
  - command: .buildkite/pipeline.sh | buildkite-agent pipeline upload
    label: ":pipeline: Upload"
  - wait
  - command: "other-script.sh"
    label: "Run other operations"

Dynamic pipeline templates

If you need the ability to use pipelines from a central catalog, or enforce certain configuration rules, you can either use dynamic pipelines and the pipeline upload command to make this happen or write custom plugins and share them across your organization.

To use dynamic pipelines and the pipeline upload command, you'd make a pipeline that looks something like this:

steps:
  - command: enforce-rules.sh | buildkite-agent pipeline upload
    label: ":pipeline: Upload"

Each team defines their steps in team-steps.yml. Your templating logic is in enforce-rules.sh, which can be written in any language that can pass YAML to the pipeline upload.

In enforce-rules.sh you can add steps to the YAML, require certain versions of dependencies or plugins, or implement any other logic you can program. Depending on your use case, you might want to get enforce-rules.sh from an external catalog instead of committing it to the team repository.
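A minimal bash sketch of such templating logic follows. It prints a team's steps and then appends a step that every pipeline must run. The security-scan.sh script and the required step are hypothetical. The sketch also assumes that team-steps.yml has a top-level steps: key, that its list items are indented by two spaces, and that steps is the last key in the file, so the appended items join that list.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical enforce-rules.sh logic: print a team's steps, then append a step
# that every pipeline must run. Assumes team-steps.yml has a top-level "steps:"
# key, two-space-indented list items, and steps as its last key.
enforce_rules() {
  local team_file="${1:-team-steps.yml}"
  if ! grep -q '^steps:' "$team_file"; then
    echo "enforce-rules: $team_file has no top-level steps: key" >&2
    return 1
  fi
  # awk '1' prints every line and guarantees the output ends with a newline.
  awk '1' "$team_file"
  cat <<'YAML'
  - wait
  - label: ":lock: Required security scan"
    command: "security-scan.sh"
YAML
}
```

In a real enforce-rules.sh, you would end the script with a call such as enforce_rules team-steps.yml, so that its output can be piped to buildkite-agent pipeline upload as in the pipeline above.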

See how Hasura.io used dynamic templates and pipelines to replace their YAML configuration with Go and some shell scripts.

Targeting specific agents

To run command steps only on specific agents:

- In the agent configuration file, tag the agent
- In the pipeline, set the agents property on the command step

For example, to run commands only on agents running on macOS:

steps:
  - command: "script.sh"
    agents:
      os: "macOS"
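On the agent side, tags go in the agent configuration file as a comma-separated list of key=value pairs, such as tags="os=macOS". The bash helper below is a hypothetical convenience for building that line. Check the agent configuration documentation for where the file lives on your platform.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Build a tags line for buildkite-agent.cfg from key=value arguments,
# for example: agent_tags_line os=macOS queue=default
agent_tags_line() {
  local pair
  for pair in "$@"; do
    if [[ "$pair" != *=* ]]; then
      echo "expected key=value, got: $pair" >&2
      return 1
    fi
  done
  local IFS=,
  # "$*" joins the arguments with the first character of IFS (a comma).
  printf 'tags="%s"\n' "$*"
}
```

An agent started with the line produced by agent_tags_line os=macOS would match the os: "macOS" requirement in the step above.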

Further documentation

You can also upload pipelines from the command line using the buildkite-agent command line tool. See the buildkite-agent pipeline documentation for a full list of the available parameters.